In this blog post I argue why it's strongly recommended to use ZFS with ECC memory when building a NAS. I would also argue that if you do not use ECC memory, it's reasonable to forgo ZFS altogether and use any (legacy) file system that suits your needs.

Why ZFS?

Many people consider using ZFS when they are planning to build their own NAS. This is for good reason: ZFS is an excellent choice for a NAS file system. There are many reasons why ZFS is such a fine choice, but the most important one is probably 'data integrity'. Data integrity was one of the primary design goals of ZFS.

ZFS assures that any corrupt data served by the underlying storage system is either detected or - if possible - corrected by using checksums and parity. This is why ZFS is so interesting for NAS builders: it's OK to use inexpensive (consumer) hard drives and solid state drives and not worry about data integrity.
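To make the detect-or-correct idea concrete, here is a minimal Python sketch. It is not how ZFS is implemented: the function names are hypothetical, SHA-256 simply stands in for a block checksum, and a two-way mirror stands in for the redundancy that makes correction possible.

```python
import hashlib


def checksum(data: bytes) -> bytes:
    """Checksum kept separately from the data block (SHA-256 for illustration)."""
    return hashlib.sha256(data).digest()


def read_with_self_heal(copies: list[bytearray], expected: bytes) -> bytes:
    """Return the first copy whose checksum matches and repair the others.

    Raises IOError when no copy matches: the corruption is detected
    but cannot be corrected.
    """
    good = next((bytes(c) for c in copies if checksum(bytes(c)) == expected), None)
    if good is None:
        raise IOError("all copies fail checksum verification")
    for c in copies:
        if checksum(bytes(c)) != expected:
            c[:] = good  # overwrite the bad copy with known-good data
    return good


block = b"family photos"
expected = checksum(block)
mirror = [bytearray(block), bytearray(block)]
mirror[0][0] ^= 0x01  # simulate a disk silently flipping one bit

data = read_with_self_heal(mirror, expected)
print(data == block, bytes(mirror[0]) == block)
```

Without the second copy, the same read could only report the error; that is the "detected" half of the sentence above.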

I will not go into the details, but for completeness I will also state that ZFS can make the difference between losing an entire RAID array or just a few files, because of the way it handles read errors as compared to 'legacy' hardware/software RAID solutions.

Understanding ECC memory

ECC memory, or Error Correcting Code memory, contains extra parity data so the integrity of the data in memory can be verified and even corrected. ECC memory can correct single-bit errors and detect multiple-bit errors per word[1].

What's most interesting is how a system with ECC memory reacts to bit errors that cannot be corrected, because that response makes all the difference in the world.

If multiple bits are corrupted within a single word, the CPU will detect the errors, but will not be able to correct them. When the CPU notices that there are uncorrectable bit errors in memory, it will generate a Machine Check Exception (MCE) that will be handled by the operating system. In most cases, this will result in a halt[2] of the system.

This behaviour will lead to a system crash, but it prevents data corruption. It prevents the bad bits from being processed by the operating system and/or applications, where they may wreak havoc.
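The correct-one, detect-two behaviour can be illustrated with a toy code. The sketch below protects 4 data bits with a Hamming(7,4) code plus one overall parity bit; real ECC modules apply the same idea to much wider words in hardware. All names are made up for illustration, and the exception merely stands in for the machine check described above.

```python
class UncorrectableError(Exception):
    """Stands in for the machine check raised on an uncorrectable error."""


def encode(nibble: int) -> list[int]:
    """Encode 4 data bits into 8 bits: index 0 is overall parity, 1..7 is Hamming(7,4)."""
    d = [(nibble >> i) & 1 for i in range(4)]
    code = [0] * 8
    code[3], code[5], code[6], code[7] = d
    code[1] = code[3] ^ code[5] ^ code[7]
    code[2] = code[3] ^ code[6] ^ code[7]
    code[4] = code[5] ^ code[6] ^ code[7]
    code[0] = sum(code[1:]) % 2
    return code


def decode(code: list[int]) -> int:
    """Return the data bits, correcting one flipped bit or raising on two."""
    code = list(code)  # work on a copy
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos  # XOR of set positions is 0 for a valid codeword
    parity_odd = sum(code) % 2 == 1
    if syndrome and parity_odd:
        code[syndrome] ^= 1  # single-bit error: the syndrome is its position
    elif syndrome and not parity_odd:
        raise UncorrectableError("double-bit error detected")
    # syndrome == 0 with odd parity means only the overall parity bit flipped
    d = [code[3], code[5], code[6], code[7]]
    return sum(bit << i for i, bit in enumerate(d))


word = encode(0b1011)
word[5] ^= 1                 # one flipped bit
recovered = decode(word)     # corrected silently

word[6] ^= 1                 # a second flipped bit in the same word
try:
    decode(word)
except UncorrectableError as exc:
    print("halt:", exc)
```

Raising instead of returning a best guess is the point: the bad word never reaches the caller.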

ECC memory is standard on all server hardware sold by all major vendors like HP, Dell, IBM, Supermicro and so on. This is for good reason, because memory errors are the norm, not the exception.

The question is really why not all computers, including desktops and laptops, use ECC memory instead of non-ECC memory. The most important reason seems to be 'cost'.

It is more expensive to use ECC memory than non-ECC memory. This is not only because ECC memory itself is more expensive. ECC memory requires a motherboard with support for ECC memory, and these motherboards tend to be more expensive as well.

Non-ECC memory is reliable enough that you won't have an issue most of the time. And when it does go wrong, you just blame Microsoft or Apple[3]. For desktops, the impact of a memory failure is less of an issue than on servers. But remember, your NAS is your own (home) server. There is some evidence that memory errors are abundant[4] on desktop systems.

The price difference is small enough not to be relevant for businesses, but for the price-conscious consumer, it is a factor. A system based on ECC memory may cost in the range of $150 - $200 more than a system based on non-ECC memory.

It's up to you if you want to spend this extra money. Why you are advised to do so will be discussed in the next paragraphs.

Why ECC memory is important to ZFS

ZFS trusts the contents of memory blindly. Please note that ZFS has no mechanisms to cope with bad memory. It is similar to every other file system in this regard. Here is a nice paper about ZFS and how it handles corrupt memory (it doesn't!).

In the best case, bad memory corrupts file data and causes a few garbled files. In the worst case, bad memory mangles in-memory ZFS file system (meta) data structures, which may lead to corruption and thus loss of the entire zpool.
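A small Python sketch of why checksums cannot help in this situation. It assumes, purely for illustration, a write path that checksums whatever is in the buffer at write time; the function names and the use of SHA-256 are not taken from ZFS.

```python
import hashlib


def write_block(buffer: bytearray) -> tuple[bytes, bytes]:
    """Checksum whatever is in RAM right now and 'store' data plus checksum."""
    data = bytes(buffer)
    return data, hashlib.sha256(data).digest()


def verify(data: bytes, digest: bytes) -> bool:
    """What a later read or scrub can check: does the data match its checksum?"""
    return hashlib.sha256(data).digest() == digest


original = b"important document"
ram = bytearray(original)
ram[3] ^= 0x10  # a bit flips in RAM before the checksum is computed

stored, digest = write_block(ram)

checksum_ok = verify(stored, digest)  # the checksum matches the corrupted data
data_intact = stored == original      # but the data is not what was intended
print(checksum_ok, data_intact)
```

The checksum faithfully protects the already-corrupted bytes, so every later verification passes while the file is garbled.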

It is important to put this into perspective. There is only a practical reason why ECC memory is more important for ZFS than for other file systems. Conceptually, ZFS does not require ECC memory any more than any other file system.

Or let Matthew Ahrens, co-founder of the ZFS project, phrase it:

"There's nothing special about ZFS that requires/encourages the use of ECC RAM more so than any other filesystem. If you use UFS, EXT, NTFS, btrfs, etc without ECC RAM, you are just as much at risk as if you used ZFS without ECC RAM. I would simply say: if you love your data, use ECC RAM. Additionally, use a filesystem that checksums your data, such as ZFS."

Now this is the important part. File systems such as NTFS, EXT4, etc have (data recovery) tools that may allow you to rescue your files when things go bad due to bad memory. ZFS does not have such tools: if the pool is corrupt, all data must be considered lost. There is no option for recovery.

So the impact of bad memory can be more devastating on a system with ZFS than on a system with NTFS, EXT4, XFS, etcetera. ZFS may force you to restore your data from backups sooner. Oh, by the way, you make backups, right?

I do have a personal concern[5]. I have nothing to substantiate this, but my thinking is that since ZFS is a far more advanced and complex file system, it may be more susceptible to the adverse effects of bad memory than legacy file systems.

ZFS, ECC memory and data integrity

The main reason for using ZFS over legacy file systems is the ability to assure data integrity. But ZFS is only one piece of the data integrity puzzle. The other part of the puzzle is ECC memory.

ZFS covers the risk of your storage subsystem serving corrupt data. ECC memory covers the risk of corrupt memory. If you leave any of these parts out, you are compromising data integrity.

If you care about data integrity, you need to use ZFS in combination with ECC memory. If you don't care that much about data integrity, it doesn't really matter if you use either ZFS or ECC memory.

Please remember that ZFS was developed to assure data integrity in a corporate IT environment, where data integrity is top priority and ECC memory in servers is the norm: a foundation on which ZFS has been built. ZFS is not some magic pixie dust that protects your data under all circumstances. If its requirements are not met, data integrity is not assured.

ZFS may be free, but data integrity and availability aren't. We spend money on extra hard drives so we can run RAID(Z) and lose one or more hard drives without losing our data. And we have to spend money on ECC memory to ensure that bad memory doesn't have a similar impact.

This is a bit of an appeal to authority and not to data or reason but I think it's still relevant. FreeNAS is a vendor of a NAS solution that uses ZFS as its foundation.

They have this to say about ECC memory:

"However if a non-ECC memory module goes haywire, it can cause irreparable damage to your ZFS pool that can cause complete loss of the storage.

...

If it’s imperative that your ZFS based system must always be available, ECC RAM is a requirement. If it’s only some level of annoying (slightly, moderately…) that you need to restore your ZFS system from backups, non-ECC RAM will fit the bill."

Hopefully your backups won't contain corrupt data. If you make backups of all data in the first place.

Many home NAS builders won't be able to afford to back up all data on their NAS, only the most critical data. For example, if you store a large collection of video files, you may accept the risk that you may have to redownload everything. If you can't accept that risk, ECC memory is a must. If you are OK with such a scenario, non-ECC memory is OK and you can save a few bucks. It all depends on your needs.

The risks faced in a business environment don't magically disappear when you apply the same technology at home. The main difference between a business setting and your home is the scale of operation, nothing else. The risks are still relevant and real.

Things break, it's that simple. And although the smaller scale at which you operate at home lowers your chances of being affected, your NAS is probably not placed in a temperature- and humidity-controlled server room. As the temperature rises, so does the risk of memory errors[6]. And remember, memory may develop spontaneous and temporary defects (random bitflips). If your system is powered on 24/7, there is a higher chance that such a thing will happen.

Conclusion

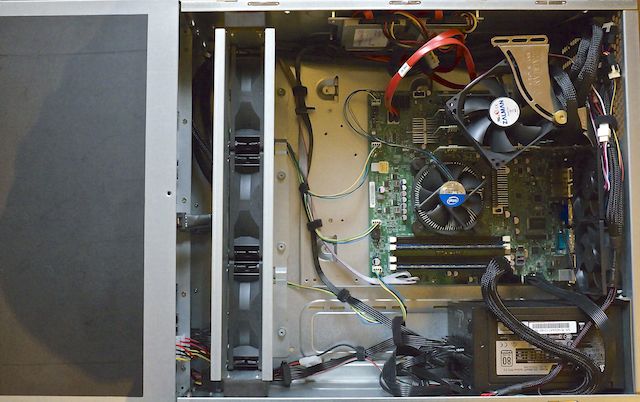

Personally, I think that even for a home NAS, it's best to use ECC memory regardless of whether you use ZFS. It makes for a more stable hardware platform. If money is a real constraint, it's better to take a look at AMD's offerings than to skip ECC memory. If you select AMD hardware, it's important to make sure that both CPU and motherboard support ECC and that it is reported to be working.
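One way to check the "reported to be working" part on Linux is to look at the kernel's EDAC counters. The sketch below assumes a Linux system with an EDAC driver loaded for your memory controller, which exposes per-controller `ce_count` (corrected) and `ue_count` (uncorrected) files under `/sys/devices/system/edac/mc`. The function name is hypothetical, and an empty result only means that no controller was reported, not that ECC is definitely absent.

```python
from pathlib import Path

EDAC_ROOT = "/sys/devices/system/edac/mc"  # assumption: Linux with EDAC enabled


def read_edac_counts(root: str = EDAC_ROOT) -> dict[str, dict[str, int]]:
    """Return corrected/uncorrected error counts per memory controller."""
    root_path = Path(root)
    counts: dict[str, dict[str, int]] = {}
    if not root_path.is_dir():
        return counts  # no EDAC sysfs tree on this system
    for mc in sorted(root_path.glob("mc[0-9]*")):
        try:
            counts[mc.name] = {
                "corrected": int((mc / "ce_count").read_text()),
                "uncorrected": int((mc / "ue_count").read_text()),
            }
        except (OSError, ValueError):
            continue  # skip controllers whose counters cannot be read
    return counts


counts = read_edac_counts()
if not counts:
    print("No EDAC memory controllers reported")
for name, c in counts.items():
    print(f"{name}: corrected={c['corrected']} uncorrected={c['uncorrected']}")
```

A non-zero corrected count is ECC doing its job; a rising count on one controller is a hint that a module may be failing.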

Still, if you decide to use non-ECC memory with ZFS: as long as you are aware of the risks outlined in this blog post and you're OK with that, fine. It's your data and you must decide for yourself what kind of protection and associated cost is reasonable for you.

When people seek advice on their NAS builds, ECC memory should always be recommended. I think that nobody should create the impression that it's 'safe' for home use not to use ECC RAM, purely seen from a technical and data integrity standpoint. People must understand that they are taking a risk. There is a significant chance that they will never experience problems, but there is no guarantee. Do they accept the consequences if it does go wrong?

If data integrity is not that important - because the data itself is not critical - I find it perfectly reasonable that people may decide not to use ECC memory and save a few hundred dollars. In that case, it would also be perfectly reasonable not to use ZFS either, which also may allow them other file system and RAID options that may better suit their particular needs.

Questions and answers

Q: When I bought my non-ECC memory, I ran memtest86+ and no errors were found, even after burn-in tests. So I think I'm safe.

A: No. A memory test with memtest86+ is just a snapshot in time. At the time you ran the test, you had the assurance that memory was fine. It could have gone bad right now, while you are reading these words, and could be corrupting your data as we speak. So even running memtest86+ frequently doesn't really buy you much.

Q: Did you see that article by Brian Moses?

A: Yes, and I disagree with his views, but I really appreciate the fact that he emphasises that you should really be aware of the risks involved and decide for yourself what suits your situation. A few points that are not OK in my opinion:

"Every bad stick of RAM I’ve experienced came to me that way from the factory and could be found via some burn-in testing."

I've seen some consumer equipment in my lifetime that suddenly developed memory errors after years of perfect operation. An argument from personal anecdote should not be used as a basis for decision making. Remember: memory errors are the norm, not the exception. Even at home. Things break, it's that simple. And having equipment running 24/7 doesn't help.

Furthermore, Brian seems to think that you can mitigate the risk of non-ECC memory by spending money on other stuff, such as off-site backups. Brian himself links to an article that rebuts his position on this. Just for completeness: How valuable is a backup of corrupted data? How do you know which data was corrupted? ZFS won't save you here.

Q: Should I use ZFS on my laptop or desktop?

A: Running ZFS on your desktop or laptop is an entirely different use case from a NAS. I see no problems with this; I don't think this discussion applies to desktop/laptop usage, especially because you are probably creating regular backups of your data to your NAS or a cloud service, right? If there are any memory errors, you will notice soon enough.

Updates

- Updated on August 11, 2015 to reflect that ZFS was not designed with ECC in mind. In this regard, it doesn't differ from other file systems.

- Updated on April 3rd, 2015 - rewrote large parts of the whole article, to make it a better read.

- Updated on January 18th, 2015 - rephrased some sentences. Changed the paragraph 'Inform people and give them a choice' to argue when it would be reasonable not to use ECC memory. Furthermore, I state more explicitly that ZFS itself has no mechanisms to cope with bad RAM.

- Updated on February 21st, 2015 - I substantially rewrote this article to give a better perspective on the ZFS + ECC 'debate'.

[1] On x64 processors, the size of a word is 64 bits.

[2] Windows will generate a "blue screen of death" and Linux will generate a "kernel panic".

[3] It is very likely that the computer you're using (laptop/desktop) encountered a memory issue this year, but there is no way you can tell. Consumer hardware doesn't have any mechanisms to detect and report memory errors.

[4] Microsoft performed a study of one million crash reports received over a period of 8 months from roughly a million systems in 2008. The result is a 1 in 1700 failure rate for single-bit memory errors in kernel code pages (a tiny subset of total memory).

"A consequence of confining our analysis to kernel code pages is that we will miss DRAM failures in the vast majority of memory. On a typical machine kernel code pages occupy roughly 30 MB of memory, which is 1.5% of the memory on the average system in our study. [...] since we are capturing DRAM errors in only 1.5% of the address space, it is possible that DRAM error rates across all of DRAM may be far higher than what we have observed."

[5] I did not come up with this argument myself.

[6] The absolutely fascinating concept of bitsquatting showed that hotter datacenters suffer more bitflips.