This is my new 71 TiB DIY NAS. This server is the successor to my six year old, twenty drive 18 TB NAS (17 TiB). With a storage capacity four times higher than the original and an incredible read (2.5 GB/s)/write (1.9 GB/s) performance, it's a worthy successor.

Purpose

The purpose of this machine is to store backups and media, primarily video.

The specs

| Part | Description |

|---|---|

| Case | Ri-vier RV-4324-01A |

| Processor | Intel(R) Xeon(R) CPU E3-1230 V2 @ 3.30GHz |

| RAM | 16 GB ECC |

| Motherboard | Supermicro X9SCM-F |

| LAN | Intel Gigabit |

| Storage Connectivity | |

| PSU | Seasonic Platinum 860 |

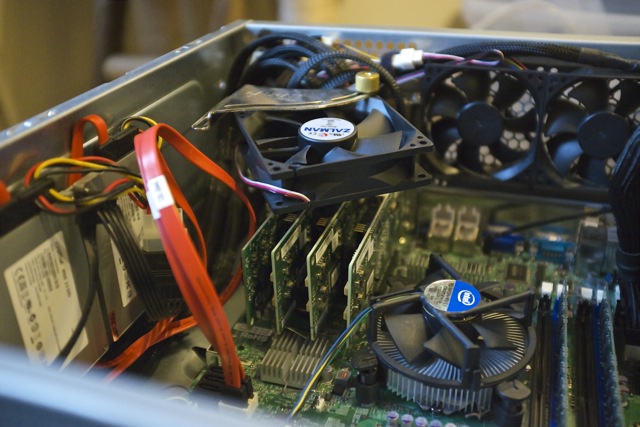

| Controller | 3 x IBM M1015 |

| Disk | 24 x HGST HDS724040ALE640 4 TB (7200RPM) |

| SSD | 2 x Crucial M500 120GB in RAID 1 for boot drives |

| Arrays | Boot: 2 x 120 GB RAID 1 and storage: 18 disk RAIDZ2+ 6 disk RAIDZ2 |

| Brutto storage | 86 TiB (96 TB) |

| Netto storage | 71 TiB (78 TB) |

| OS | |

| Filesystem | ZFS |

| Rebuild time | Depends on amount of data (rate is 4 TB/Hour) |

| UPS | |

| Power usage | about 200 Watt idle |

CPU

The Intel Xeon E3-1230 V2 is not the latest generation but one of the cheapest Xeons you can buy and it supports ECC memory. It's a quad-core processor with hyper-threading.

Here you can see how it performs compared to other processors.

Memory

The system has 16 GB ECC RAM. Memory is relatively cheap these days but I don't have any reason to upgrade to 32 GB. I think that 8 GB would have been fine with this system.

Motherboard

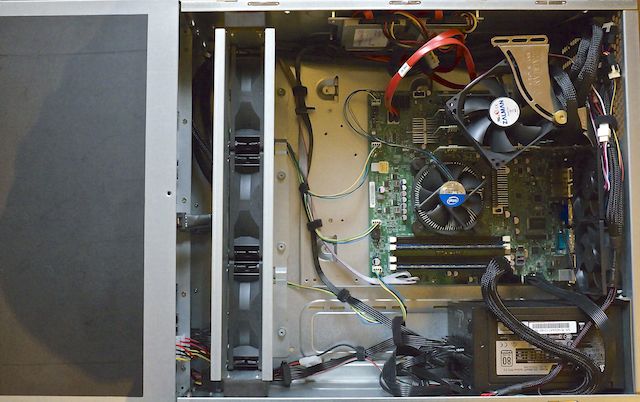

The server is build around the SuperMicro X95SCM-F motherboard.

This is a server-grade motherboard and comes with typical features you might expect, like ECC memory support and out-of-band management (IPMI).

This motherboard has four PCIe slots (2 x 8x and 2 x 4x) in an 8x physical slot. My build requires four PCIe 4x+ slots and there aren't (m)any other server boards at this price point that support four PCIe slots in a 8x sized slot.

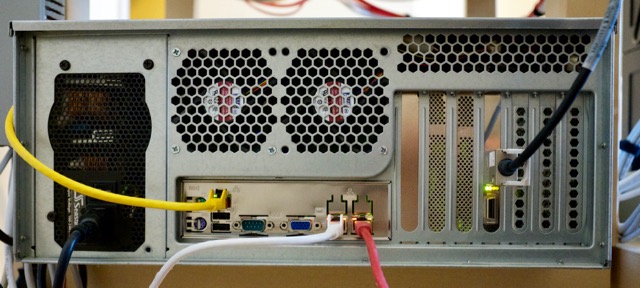

The chassis

The chassis has six rows of four drive bays that are kept cool by three 120mm fans in a fan wall behind the drive bays. At the rear of the case, there are two 'powerful' 80mm fans that remove the heat from the case, together with the PSU.

The chassis has six SAS backplanes that connect four drives each. The backplanes have dual molex power connectors, so you can put redundant power supplies into the chassis. Redundant power supplies are more expensive and due to their size, often have smaller, thus noisier fans. As this is a home build, I opted for just a single regular PSU.

When facing the front, there is a place at the left side of the chassis to mount a single 3.5 inch or two 2.5 inch drives next to each other as boot drives. I've mounted two SSDs (RAID1).

This particular chassis version has support for SPGIO, which should help identifying which drive has failed. The IBM 1015 cards I use do support SGPIO. Through the LSI megaraid CLI I have verified that SGPIO works, as you can use this tool as a drive locator. I'm not entirely sure how well SGPIO works with ZFS.

Power supply

I was using a Corsair 860i before, but it was unstable and died on me.

The Seasonic Platinum 860 may seem like overkill for this system. However, I'm not using staggered spinup for the 24 drives. So the drives all spinup at once and this results in a peak power usage of 600+ watts.

The PSU has a silent mode that causes the fan only to spin if the load reaches a certain threshold. Since the PSU fan also helps removing warm air from the chassis, I've disabled this feature, so the fan is spinning at all times.

Drive management

I've written a tool called lsidrivemap that displays each drive in an ASCII table that reflects the physical layout of the chassis.

The data is based on the output of the LSI 'megacli' tool for my IBM 1015 controllers.

root@nano:~# lsidrivemap disk

| sdr | sds | sdt | sdq |

| sdu | sdv | sdx | sdw |

| sdi | sdl | sdp | sdm |

| sdj | sdk | sdn | sdo |

| sdb | sdc | sde | sdf |

| sda | sdd | sdh | sdg |

This layout is 'hardcoded' for my chassis but the Python script can be easily tailored for your own server, if you're interested.

It can also show the temperature of the disk drives in the same table:

root@nano:~# lsidrivemap temp

| 36 | 39 | 40 | 38 |

| 36 | 36 | 37 | 36 |

| 35 | 38 | 36 | 36 |

| 35 | 37 | 36 | 35 |

| 35 | 36 | 36 | 35 |

| 34 | 35 | 36 | 35 |

These temperatures show that the top drives run a bit hotter than the other drives. An unverified explanation could be that the three 120mm fans are not in the center of the fan wall. They are skewed to the bottom of the wall, so they may favor the lower drive bays.

Filesystem (ZFS)

I'm using ZFS as the file system for the storage array. At this moment, there is no other file system that has the same features and stability as ZFS. BTRFS is not even finished.

The number one design goal of ZFS was assuring data integrity. ZFS checksums all data and if you use RAIDZ or a mirror, it can even repair data. Even if it can't repair a file, it can at least tell you which files are corrupt.

ZFS is not primarily focussed on performance, but to get the best performance possible, it makes heavy usage of RAM to cache both reads and writes. This is why ECC memory is so important.

ZFS also implements RAID. So there is no need to use MDADM. My previous file server was running a single RAID 6 of 20 x 1TB drives. With this new system I've created a single pool with two RAIDZ2 VDEVs.

Capacity

Vendors still advertise the capacity of their hard drives in TB whereas the operating system works with TiB. So the 4 TB drives I use are in fact 3.64 TiB.

The total raw storage capacity of the system is about 86 TiB.

My zpool is the 'appropriate' number of disks (2^n + parity^) in the VDEVs. So I have one 18 disk RAIDZ2 VDEV (2^4+2) and one 6 disk RAIDZ2 VDEV (2^2+2^) for a total of 24 drives.

Different VDEV sizes in a single pool are often not recommended, but ZFS is very smart and cool: it load-balances the data across the VDEVs based on the size of the VDEV. I could verify this with zpool iostat -v 5 and witness this in real-time. The small VDEV got just a fraction of the data compared to the large VDEV.

This choice leaves me with less capacity (71 TiB vs. 74 TiB for RAIDZ3) and also has a bit more risk to it, with the eighteen-disk RAIDZ2 VDEV. Regarding this latter risk, I've been running a twenty-disk MDADM RAID6 for the last 6 years and haven't seen any issues. That does not tell everything, but I'm comfortable with this risk.

Originalyl I was planning on using RAIDZ3 and by using ashift=9 (512 byte sectors) I would recuperate most of the space lost to the non-optimal number of drives in the VDEV. So why did I change my mind? Because the performance of my ashift=9 pool on my 4K drives deteriorated so much that a resilver of a failed drive would take ages.

Storage controllers

The IBM 1015 HBA's are reasonably priced and buying three of them, is often cheaper than buying just one HBA with a SAS expander. However, it may be cheaper to search for an HP SAS expander and use it with just one M1015 and save a PCIe slot.

I have not flashed the controllers to 'IT mode', as most people do. They worked out-of-the-box as HBAs and although it may take a little bit longer to boot the system, I decided not to go through the hassle.

The main risk here is how the controller handles a drive if a sector is not properly read. It may disable the drive entirely, which is not necessary for ZFS and often not preferred.

Storage performance

With twenty-four drives in a chassis, it's interesting to see what kind of performance you can get from the system.

Let's start with a twenty-four drive RAID 0. The drives I use have a sustained read/write speed of 160 MB/s so it should be possible to reach 3840 MB/s or 3.8 GB/s. That would be amazing.

This is the performance of a RAID 0 (MDADM) of all twenty-four drives.

root@nano:/storage# dd if=/dev/zero of=test.bin bs=1M count=1000000

1048576000000 bytes (1.0 TB) copied, 397.325 s, 2.6 GB/s

root@nano:/storage# dd if=test.bin of=/dev/null bs=1M

1048576000000 bytes (1.0 TB) copied, 276.869 s, 3.8 GB/s

Dead on, you would say, but if you divide 1 TB with 276 seconds, it's more like 3.6 GB/s. I would say that's still quite close.

This machine will be used as a file server and a bit of redundancy would be nice. So what happens if we run the same benchmark on a RAID6 of all drives?

root@nano:/storage# dd if=/dev/zero of=test.bin bs=1M count=100000

104857600000 bytes (105 GB) copied, 66.3935 s, 1.6 GB/s

root@nano:/storage# dd if=test.bin of=/dev/null bs=1M

104857600000 bytes (105 GB) copied, 38.256 s, 2.7 GB/s

I'm quite pleased with these results, especially for a RAID6. However, RAID6 with twenty-four drives feels a bit risky. So since there is no support for a three-parity disk RAID in MDADM/Linux, I use ZFS.

Sacrificing performance, I decided - as I mentioned earlier - to use ashift=9 on those 4K sector drives, because I gained about 5 TiB of storage in exchange.

This is the performance of twenty-four drives in a RAIDZ3 VDEV with ashift=9.

root@nano:/storage# dd if=/dev/zero of=ashift9.bin bs=1M count=100000

104857600000 bytes (105 GB) copied, 97.4231 s, 1.1 GB/s

root@nano:/storage# dd if=ashift9.bin of=/dev/null bs=1M

104857600000 bytes (105 GB) copied, 42.3805 s, 2.5 GB/s

Compared to the other results, write performance is way down, although not too bad.

This is the write performance of the 18 disk RAIDZ2 + 6 disk RAIDZ2 zpool (ashift=12):

root@nano:/storage# dd if=/dev/zero of=test.bin bs=1M count=1000000

1048576000000 bytes (1.0 TB) copied, 543.072 s, 1.9 GB/s

root@nano:/storage# dd if=test.bin of=/dev/null bs=1M

1048576000000 bytes (1.0 TB) copied, 400.539 s, 2.6 GB/s

As you may notice, the write performance is better than the ashift=9 or ashift=12 RAIDZ3 VDEV.

In the end I chose to use the 18 disk RAIDZ2 + 6 disk RAIDZ2 setup because of the better performance and to adhere to the standards of ZFS.

I have not benchmarked random I/O performance as it is not relevant for this system. And with ZFS, the random I/O performance of a VDEV is that of a single drive.

Boot drives

I'm using two Crucial M500 120GB SSD drives. They are configured in a RAID1 (MDADM) and I've installed Debian Wheezy on top of them.

At first, I was planning on using a part of the capacity for caching purposes in combination with ZFS. However, there's no real need to do so. In hindsight I could also have used to very cheap 2.5" hard drives (simmilar to my older NAS), which would have cost less than a single M500.

Update 2014-09-01: I actually reinstalled Debian and kept about 50% free space on both M500s and put this space in a partition. These partitions have been provided to the ZFS pool as L2ARC cache. I did this because I could, but on the other hand, I wonder if I'm only really just wearing out my SSDs faster.

Update 2015-10-04: I saw no reason why I would wear out my SSDs as a L2ARC so I removed them from my pool. There is absolutely no benefit in my case.

Networking (updated 2017-03-25)

Current: I have installed a Mellanox MHGA28-XTC InfiniBand card. I'm using InfiniBand over IP so the InfiniBand card is effectively a faster network card. I have a point-to-point connection with another server, I do not have an InfiniBand switch.

I get about 6.5 Gbit from this card, which is not even near the theoretical performance limit. However, this translate into a constant 750 MB/s file transfer speed over NFS, which is amazing.

Using Linux bonding and the quad-port Ethernet adapter, I only got 400 MB/s and transfer speeds were fluctuating a lot.

Original: Maybe I will invest in 10Gbit ethernet or InfiniBand hardware in the future, but for now I settled on a quad-port gigabit adapter. With Linux bonding, I can still get 450+ MB/s data transfers, which is sufficient for my needs.

The quad-port card is in addition to the two on-board gigabit network cards. I use one of the on-board ports for client access. The four ports on the quad-port card are all in different VLANs and not accessible for client devices.

The storage will be accessible over NFS and SMB. Clients will access storage over one of the on-board Gigabit LAN interfaces.

Keeping things cool and quiet

It's important to keep the drive temperature at acceptable levels and with 24 drives packet together, there is an increased risk of overheating.

The chassis is well-equipped to keep the drives cool with three 120mm fans and two strong 80mm fans, all supporting PWM (pulse-width modulation).

The problem is that by default, the BIOS runs the fans at a too low speed to keep the drives at a reasonable temperature. I'd like to keep the hottest drive at about forty degrees Celsius. But I also want to keep the noise at reasonable levels.

I wrote a python script called storagefancontrol that automatically adjusts the fan speed based on the temperature of the hottest drive.

UPS

I'm running a HP N40L micro server as my firewall/router. My APC Back-UPS RS 1200 LCD (720 Watt) is connected with USB to this machine. I'm using apcupsd to monitor the UPS and shutdown servers if the battery runs low.

All servers, including my new build, run apcupsd in network mode and talk to the N40L to learn if power is still OK.

Keeping power consumption reasonable

So these are the power usage numbers.

96 Watt with disks in spin down.

176 Watt with disks spinning but idle.

253 Watt with disks writing.

Edit 2015-10-04: I do have an unresolved issue where the drives keep spinning up even with all services on the box killed, including Cron. So it's configured so that the drives are always spinning. /end edit

But the most important stat is that it's using 0 Watt if powered off. The system will be turned on only when necessary through wake-on-lan. It will be powered off most of the time, like when I'm at work or sleeping.

Cost

The system has cost me about €6000. All costs below are in Euro and include taxes (21%).

| Description | Product | Price | Amount | Total |

| Chassis | Ri-vier 4U 24bay storage chassis RV-4324-01A | 554 | 1 | 554 |

| CPU | Intel Xeon E3-1230V2 | 197 | 1 | 197 |

| Mobo | SuperMicro X9SCM-F | 157 | 1 | 157 |

| RAM | Kingston DDR3 ECC KVR1333D3E9SK2/16G | 152 | 1 | 152 |

| PSU | AX860i 80Plus Platinum | 175 | 1 | 175 |

| Network Card | NC364T PCI Express Quad Port Gigabit | 145 | 1 | 145 |

| HBA Controller | IBM SERVERAID M1015 | 118 | 3 | 354 |

| SSDs | Crucial M500 120GB | 62 | 2 | 124 |

| Fan | Zalman FB123 Casefan Bracket + 92mm Fan | 7 | 1 | 7 |

| Hard Drive | Hitachi 3.5 4TB 7200RPM (0S03356) | 166 | 24 | 3984 |

| SAS Cables | 25 | 6 | 150 | |

| Fan cables | 6 | 1 | 6 | |

| Sata-to-Molex | 3,5 | 1 | 3,5 | |

| Molex splitter | 3 | 1 | 3 | |

| 6012 | ||||

Closing words

If you have any questions or remarks about what could have been done differently feel free to leave a comment, I appreciate it.

Comments