Introduction

I'm aware that most people with intensive storage workloads no longer run them on hard drives; that ship sailed a long time ago. SSDs (or 'the cloud') have taken their place.

For those few left who do use hard drives in Linux software RAID setups and run workloads that generate a lot of random IOPS, this may still be relevant.

I'm not sure how much a bitmap affects MDADM software RAID arrays based on solid state drives as I have not tested them.

The purpose of the bitmap

By default, when you create a new software RAID array with MDADM, a bitmap is also configured. The purpose of the bitmap is to speed up recovery of your RAID array in case the array gets out of sync.

A bitmap won't speed up recovery from a drive failure. It helps when the RAID array gets out of sync for another reason, such as a hard reset or power failure during write operations.
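To see whether an existing array has a bitmap at all, you can look for a `bitmap:` line under the array in `/proc/mdstat`. The snippet below is only a small sketch of that check; `arrays_with_bitmap` is a helper name I made up for illustration, and it assumes the usual mdstat layout where the `bitmap:` line is indented under its array.

```shell
# List md arrays that report a bitmap line in mdstat-formatted input.
# Usage: arrays_with_bitmap [FILE]   (FILE defaults to /proc/mdstat)
# arrays_with_bitmap is a hypothetical helper, not part of mdadm.
arrays_with_bitmap() {
    awk '
        /^md[^ ]* :/     { name = $1 }             # remember the current array
        /^[ \t]+bitmap:/ { if (name) print name }  # indented bitmap line under it
    ' "${1:-/proc/mdstat}"
}
```

Running `arrays_with_bitmap` without arguments reads the live `/proc/mdstat`; `mdadm --detail /dev/md0` should show similar information for a single array.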

The performance impact

During some benchmarking of various RAID arrays, I noticed very bad random write IOPS performance. No matter what the test conditions were, I got the random write performance of a single drive, although the RAID array should perform better.

Then I noticed that the array was configured with a bitmap. Just for testing purposes, I removed the bitmap altogether with:

mdadm --grow --bitmap=none /dev/md0

Random write IOPS figures improved immediately. This resource explains why:

If the word internal is given, then the bitmap is stored with the metadata on the array, and so is replicated on all devices.

So when you write data to your RAID array, the bitmap is also constantly updated. Since that bitmap lives on each drive in the array, every update costs additional writes (and, on hard drives, seeks) on all of them, which really hurts random write IOPS.
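If you want to compare both situations yourself, you need to switch the bitmap off and back on again. Here is a minimal sketch of a wrapper for that; `set_bitmap` and the `RUN` variable are names I invented for this example. By default it only prints the `mdadm --grow` command, so you can check it before running anything as root.

```shell
# Print (default) or run the mdadm command that switches an array's bitmap mode.
# MODE is "none" or "internal"; set RUN=1 to actually execute (needs root).
# set_bitmap and RUN are hypothetical names, not part of mdadm.
set_bitmap() {
    mode=$1 dev=$2
    case $mode in
        none|internal) ;;
        *) echo "mode must be none or internal" >&2; return 1 ;;
    esac
    if [ "${RUN:-0}" = 1 ]; then
        mdadm --grow --bitmap="$mode" "$dev"
    else
        echo "mdadm --grow --bitmap=$mode $dev"
    fi
}
```

For example, `set_bitmap internal /dev/md0` prints the command that puts the default internal bitmap back after a test run without one.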

Some examples of the performance impact

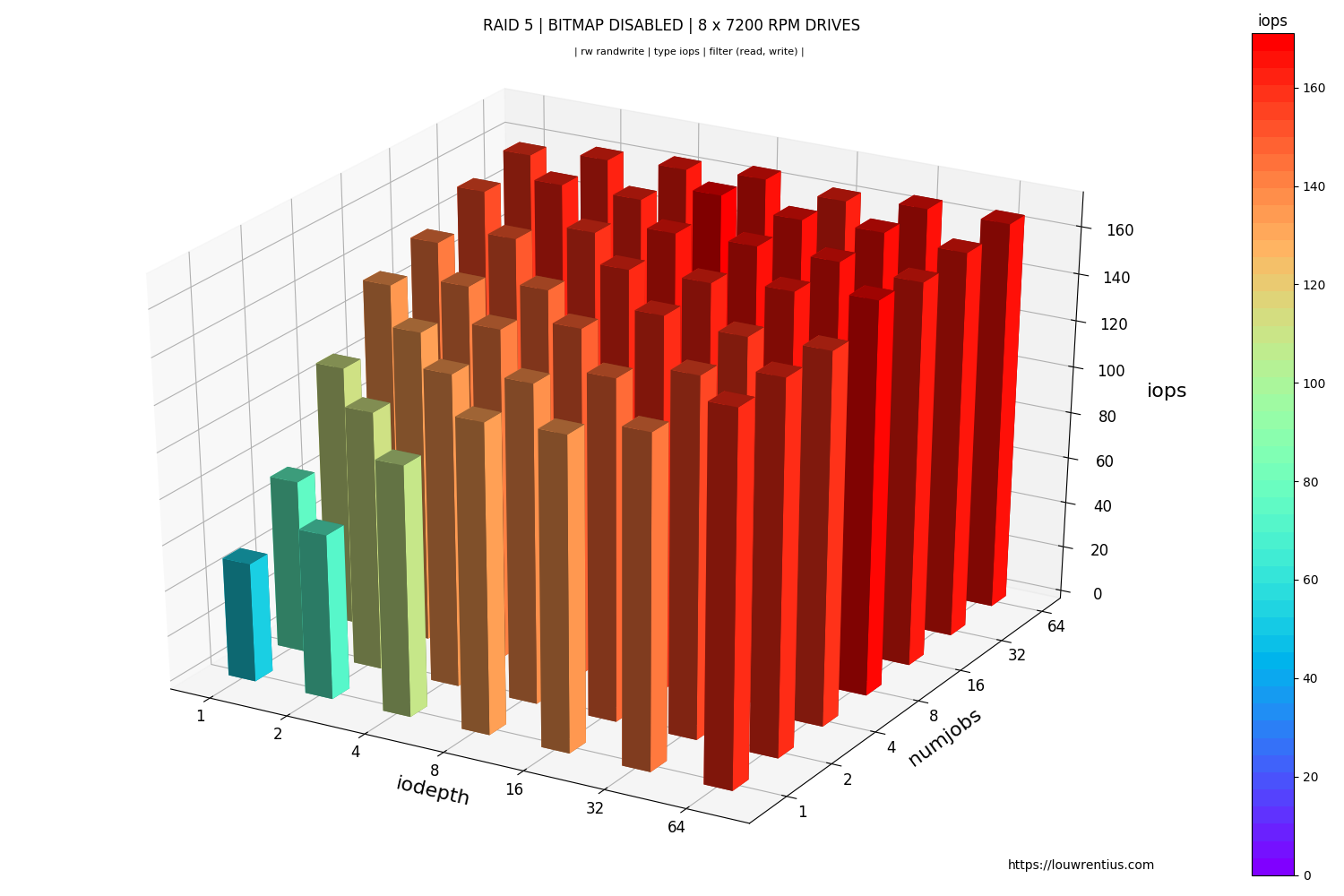

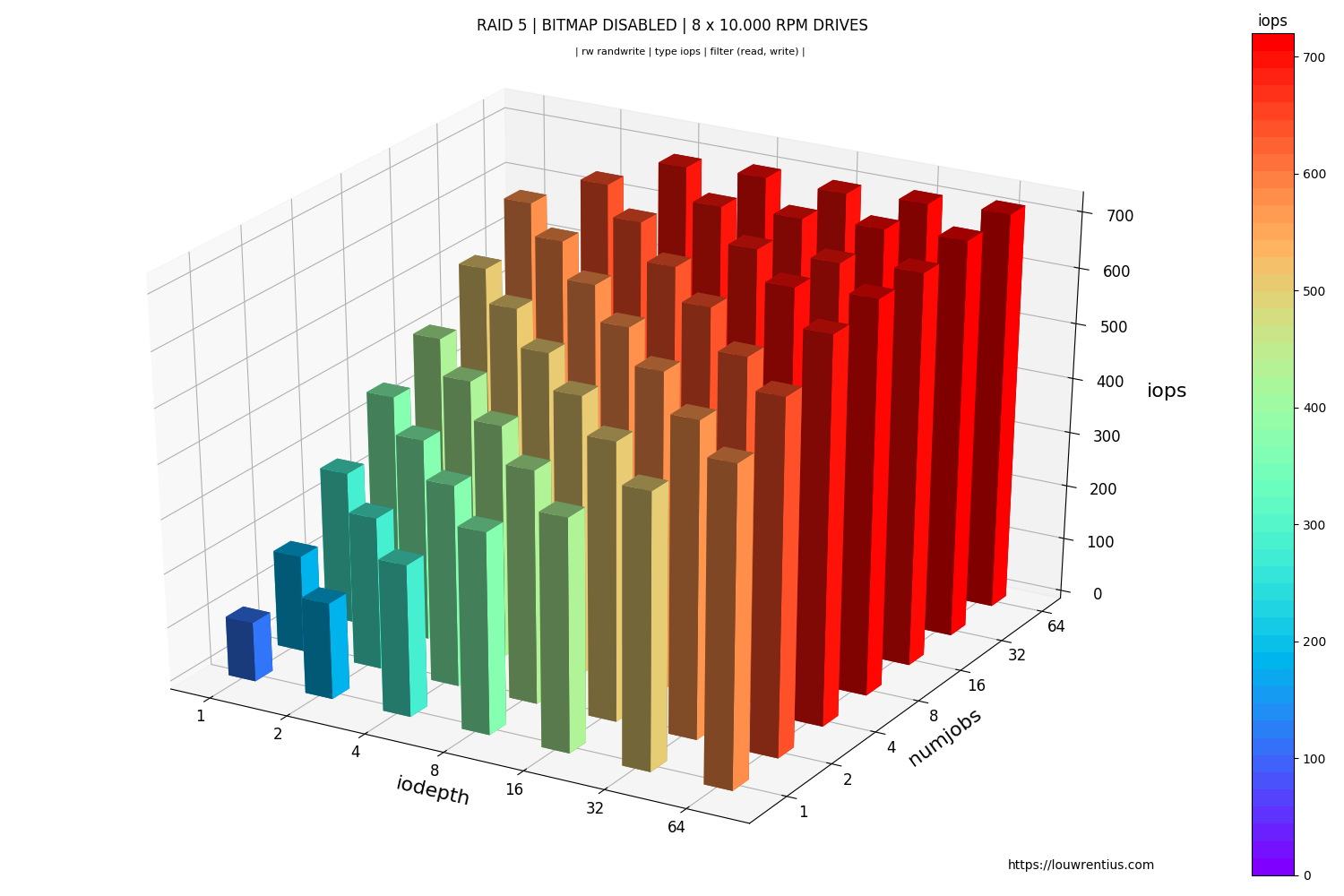

Bitmap disabled

An example of a RAID 5 array with 8 x 7200 RPM drives.

Another example with 10,000 RPM drives:

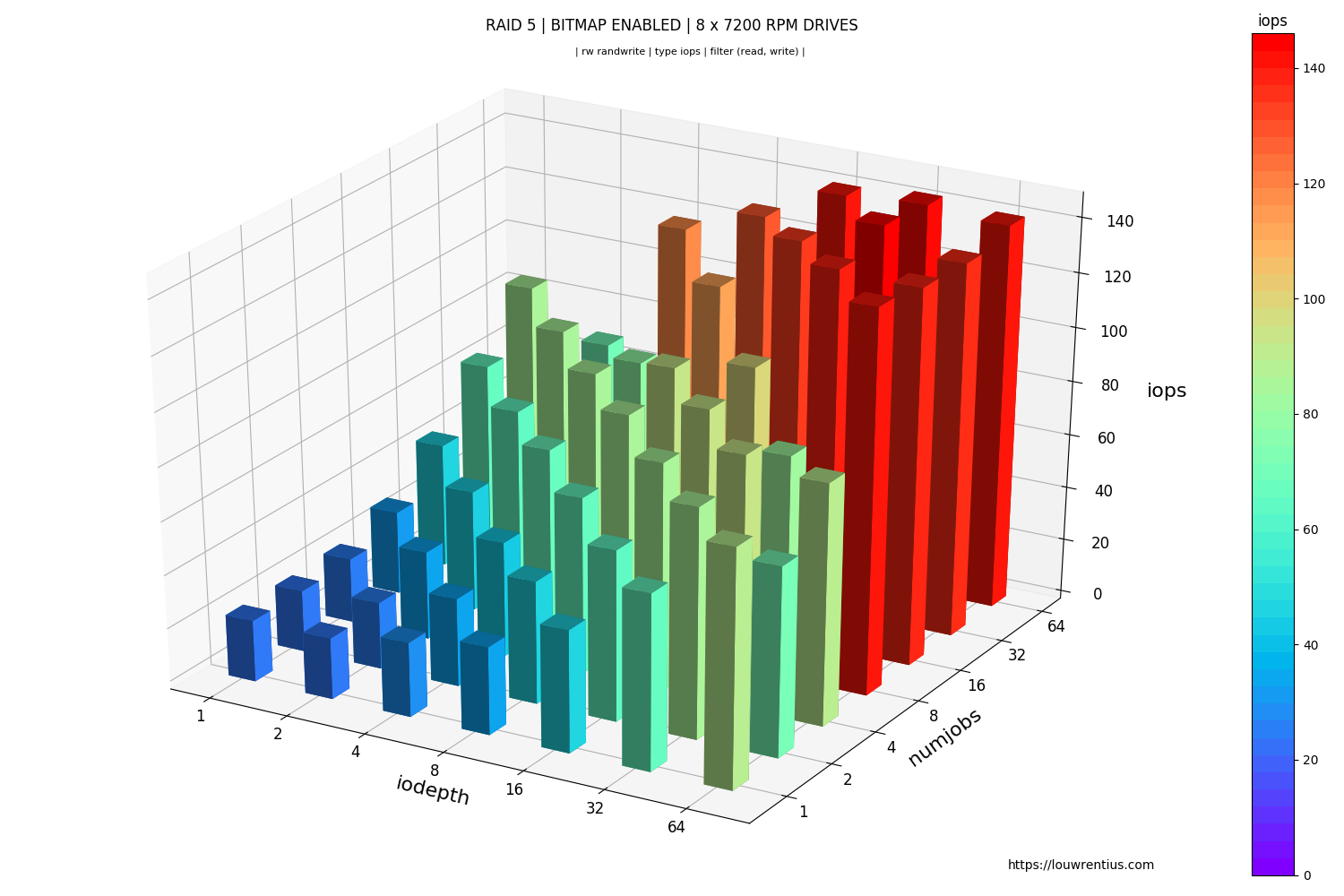

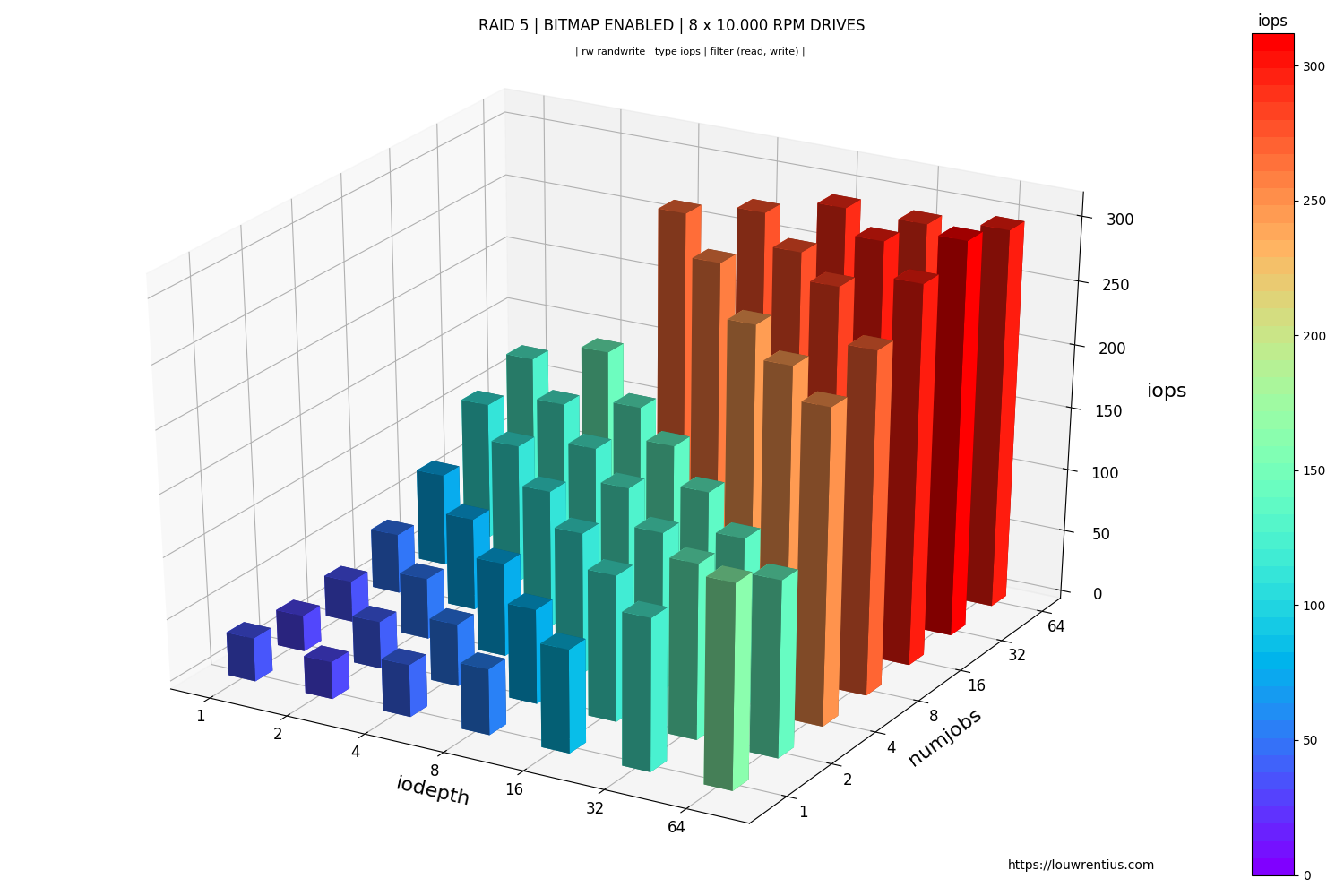

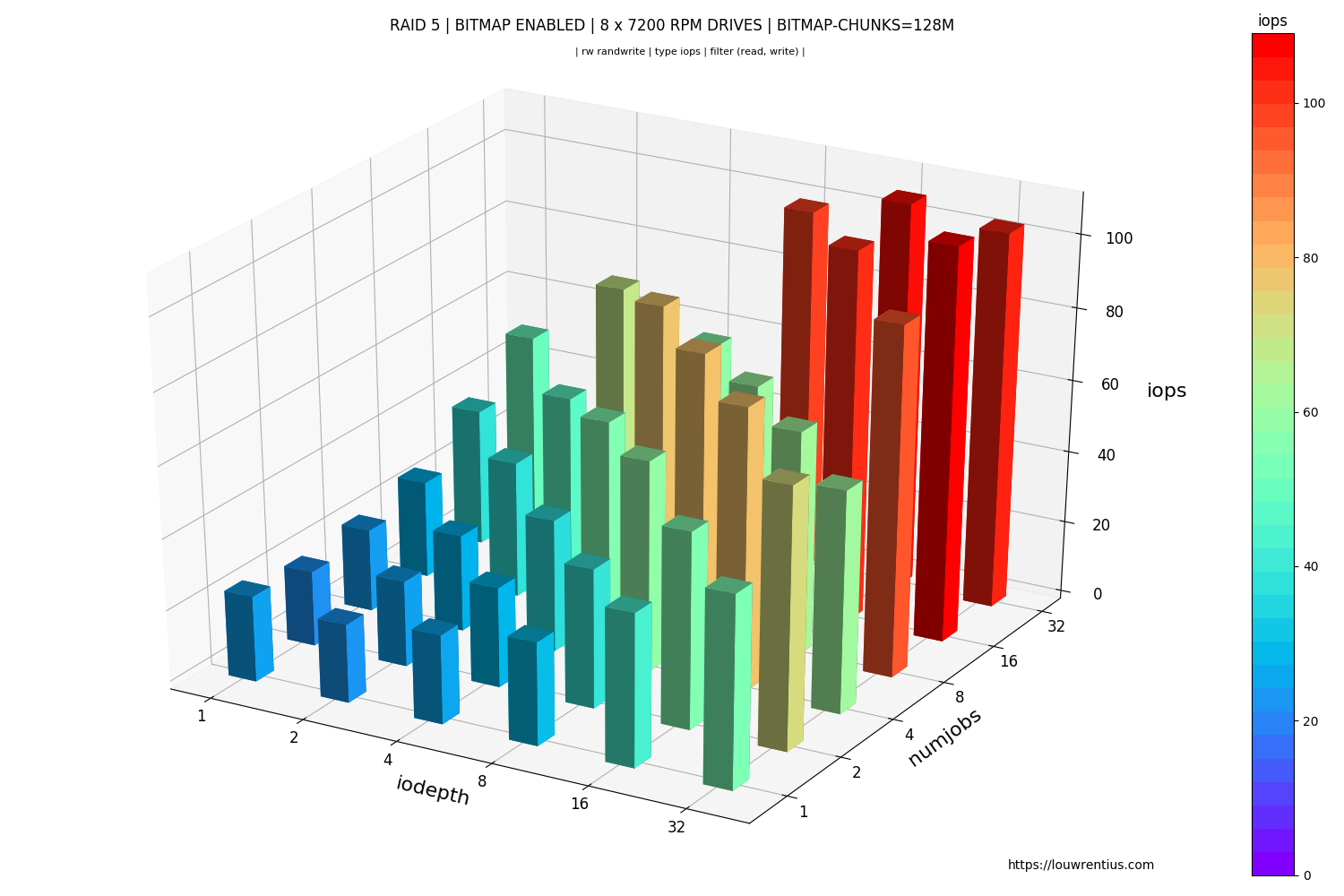

Bitmap enabled (internal)

We observe significantly lower random write IOPS performance overall:

The same is true for 10,000 RPM drives.

External bitmap

You could keep the bitmap and still get great random write IOPS by putting the bitmap on a separate SSD. Since my boot device is an SSD, I tested this option like this:

mdadm --grow --bitmap=/raidbitmap /dev/md0

I noticed excellent random write IOPS with this external bitmap, similar to running without a bitmap at all. An external bitmap has its own risks and caveats, so make sure it really fits your needs.

Note: external bitmaps are only known to work on ext2 and ext3. Storing bitmap files on other filesystems may result in serious problems.
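Given that warning, it may be worth checking the filesystem before pointing `--bitmap=` at a file. The following is just a sketch: `bitmap_fs_supported` is a made-up helper that encodes the ext2/ext3 rule from the note above, and the commented usage assumes `findmnt` from util-linux is installed.

```shell
# Succeed only for the filesystems the note above names as known to work.
# bitmap_fs_supported is a hypothetical helper, not part of mdadm.
bitmap_fs_supported() {
    case $1 in
        ext2|ext3) return 0 ;;
        *)         return 1 ;;
    esac
}

# Possible usage, assuming findmnt is available and the bitmap file would
# live on the root filesystem:
#   fstype=$(findmnt -n -o FSTYPE -T /)
#   bitmap_fs_supported "$fstype" || echo "warning: $fstype is not ext2/ext3" >&2
```

Note that a typical modern install uses ext4 or another filesystem for the boot device, which this check would flag.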

Conclusion

For home users who build DIY NAS servers and who do run MDADM RAID arrays, I would recommend leaving the bitmap enabled. The impact on sequential file transfers is negligible and the benefit of a quick RAID resync is very obvious.

Only if you have a workload that would cause a ton of random writes on your storage server would I consider disabling the bitmap. An example of such a use case would be running virtual machines with a heavy write workload.

Update on bitmap-chunks

Based on feedback in the comments, I've performed a benchmark on a new RAID 5 array, setting the --bitmap-chunk option to 128M (the default is 64M).

The results seem to be significantly worse than the default for random write IOPS performance.
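For context on what the chunk size changes: each bit in the bitmap covers one chunk of the tracked region, so doubling the chunk size halves the number of bits. The arithmetic below is only an illustration; `bitmap_bits` is a made-up helper, and the 8 TiB figure is an assumed size for the tracked region, not the array from my benchmark.

```shell
# Number of bitmap bits needed to cover a region: ceil(region size / chunk size).
# Both arguments are in MiB. bitmap_bits is a hypothetical helper name.
bitmap_bits() {
    region_mib=$1 chunk_mib=$2
    echo $(( (region_mib + chunk_mib - 1) / chunk_mib ))
}

# An assumed 8 TiB tracked region (8388608 MiB):
bitmap_bits 8388608 64     # prints 131072
bitmap_bits 8388608 128    # prints 65536
```

This does not explain the worse results I measured with 128M; it only shows what the option changes.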
