Introduction

Back in 2004, I visited a now bankrupt Dutch computer store called MyCom1, located at the Kinkerstraat in Amsterdam. I was there to buy a Western Digital Raptor model WD740, with 74 GB of capacity, running at 10,000 RPM.

When I bought this drive, we were still in the middle of the transition from the PATA interface to SATA2. My raptor hard drive still had a molex connector because older computer power supplies didn't have SATA power connectors.

You may notice that I eventually managed to break off the plastic tab of the SATA power connector. Fortunately, I could still power the drive through the Molex connector.

A later version of the same drive came with the Molex connector disabled, as you can see below.

Why did the Raptor matter so much?

I was very eager to get this drive as it was quite a bit faster than any consumer drive on the market at that time.

This drive not only made your computer start up faster, but it made it much more responsive. At least, it really felt like that to me at the time.

The faster spinning drive wasn't so much about more throughput in MB/s - although that improved too - it was all about reduced latency.

A drive that spins faster3 can complete more I/O operations per second or IOPs4. It can do more work in the same amount of time, because each operation takes less time, compared to slower turning drives.

The Raptor - mostly focussed on desktop applications5 - brought a lot of relief for professionals and consumer enthusiasts alike. Hard disk performance, notably latency, was one of the big performance bottlenecks at the time.

For the vast majority of consumers or employees this bottleneck would start to be alleviated only well after 2010 when SSDs slowly started to become standard in new computers.

And that's mostly also the point of SSDs: their I/O operations are measured in micro seconds instead of milliseconds. It's not that throughput (MB/s) doesn't matter, but for most interactive applications, you care about latency. That's what makes an old computer feel as new when you swap out the hard drive for an SSD.

The Raptor as a boot drive

For consumers and enthusiast, the Raptor was an amazing boot drive. The 74 GB model was large enough to hold the operating system and applications. The bulk of the data would still be stored on a second hard drive either also connected through SATA or even still through PATA.

Running your computer with a Raptor for the boot drive, resulted in lower boot times and application load times. But most of all, the system felt more responsive.

And despite the 10,000 RPM speed of the platters, it wasn't that much louder than regular drives at the time.7.

In the video above, a Raspberry Pi4 boots from a 74 GB Raptor hard drive.

Alternatives to the raptor at that time

To put things into perspective, 10,000 RPM drives were quite common even in 2003/2004 for usage in servers. The server-oriented drives used the SCSI interface/protocol which was incompatible with the on-board IDE/SATA controllers.

Some enthusiasts - who had the means to do so - did buy both the controller8 and one or more SCSI 'server' drives to increase the performance of their computer. They could even get 15,000 RPM hard drives! These drives however, were extremely loud and had even less capacity.

The Raptor did perform remarkably well in almost all circumstances, especially those who mattered to consumers and consumer enthusiasts alike. Suddenly you could get SCSI/Server performance for consumer prices.

The in-depth review of the WD740 by Techreport really shows how significant the raptor was.

The Velociraptor

The Raptor eventually got replaced with the Velociraptor. The Velociraptor had a 2.5" formfactor, but it was much thicker than a regular 2.5" laptop drive. Because it spun at 10,000 RPM, the drive would get hot and thus it was mounted in an 'icepack' to disipate the generated heat. This gave the Velociraptor a 3.5" formfactor, just like the older Raptor drives.

In the video below, a Raspberry Pi4 boots from a 500 GB Velociraptor hard drive.

Benchmarking the (Veloci)raptor

Hard drives do well with sequential read/write patterns, but their performance implodes when the data access pattern becomes random. This is due to the mechanical nature of the device. That random access pattern is where 10,000 RPM outperform their slower turning siblings.

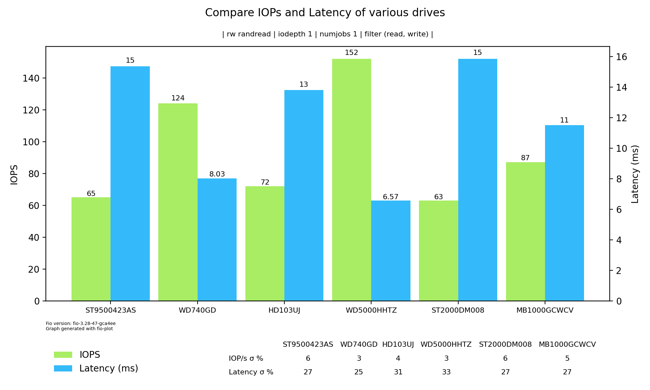

Random 4K read performance showing both IOPs and latency. This is kind of a worst-case benchmark to understand the raw I/O and latency performance of a drive.

| Drive ID | Form Factor | RPM | Size (GB) | Description |

|---|---|---|---|---|

| ST9500423AS | 2.5" | 7200 | 500 | Seagate laptop hard drive |

| WD740GD-75FLA1 | 3.5" | 10,000 | 74 | Western Digital Raptor WD740 |

| SAMSUNG HD103UJ | 3.5" | 7200 | 1000 | Samsung Spintpoint F1 |

| WDC WD5000HHTZ | 2.5" in 3.5" | 10,000 | 500 | Western Digital Velociraptor |

| ST2000DM008 | 3.5" | 7200 | 2000 | Seagate 3.5" 2TB drive |

| MB1000GCWCV | 3.5" | 7200 | 1000 | HP Branded Seagate 1 TB drive |

I've tested the drives on an IBM M1015 SATA RAID card flashed to IT mode (HBA mode, no RAID firmware). The image is generated with fio-plot, which also comes with a tool to run the fio benchmarks.

It is quite clear that both 10,000 RPM drives outperform all 7200 rpm drives, as expected.

If we compare the original 3.5" Raptor to the 2.5" Velociraptor, the performance increase is significant: 22% more IOPs and 18% lower latency. I think that performance increase is due to a combination of the higher data density, the smaller size (r/w head is faster in the spot it needs to be) and maybe better firmware.

Both the laptop and desktop Seagate drives seem to be a bit slower than they should be based on theory. The opposite is true for the HP (rebranded Seagate), which seem to perform better than expected for the capacity and rotational speed. I have no idea why that is. I can only speculate that because the HP drive came out of a server, that the fireware was tuned for server usage patterns.

Closing words

Although the performance increase of the (veloci)raptor was quite significant, it never gained wide-spread adoption. Especially when the Raptor first came to marked, its primary role was that of a boot drive because of its small capacity. You still needed a second drive for your data. So the increase in performance came at a significant extra cost.

The Raptor and Velociraptor are now obsolete. You can get a solid state drive for $20 to $40 and even those budget-oriented SSDs will outperform a (Veloci)raptor many times over.

If you are interested in more pictures and details, take a look at this article.

This article was discussed on Hacker News here.

Reddit thread about this article can be found here

-

Mycom, a chain store with quite a few shops in all major cities in The Netherlands, went bankrupt twice, once in 2015 and finally in 2019. ↩

-

We are talking about the first SATA version, with a maximum bandwidth capacity of 150 MB/s. Plenty enough for hard drives at that time. ↩

-

https://en.wikipedia.org/wiki/Hard_disk_drive_performance_characteristics ↩

-

https://louwrentius.com/understanding-storage-performance-iops-and-latency.html ↩

-

I read that WD intended the first Raptor (34 GB version) to be used in low-end servers as a cheaper alternative to SCSI drives . After the adoption of the Raptor by computer enthusiasts and professionals, it seems that Western Digital pivoted, so the next version - the 74 GB I have - was geared more towards desktop usage. That also meant that this 74 GB model got fluid bearings, making it quieter6. ↩

-

The 74 GB model is actually rather quiet drive at idle. Drive activity sounds rather smooth and pleasant, no rattling. ↩

-

Please note that the first model, the 37 GB version, used ball bearings in stead of fluid bearings, and was reported to be significant louder. ↩

-

Low-end SCSI card were often used to power flatbed scanners, Iomega ZIP drives, tape drives or other peripherals, but in order to benefit from the performance of those server hard drives, you needed a SCSI controller supporting higher bandwidth and those were more expensive. ↩